When people ask me what I do for a living I give up trying to explain what it is and just say ‘software’, at which point their eyes glaze over and the conversation shifts to weather, which politician has done what insanely hypocritical thing and whose round it is.

The reason I find it hard to explain is that it’s hard to pin down just what a tech-mad solution architect does; I stay just behind the cutting edge of open source software, with one foot in the camp of supported versions (the Red Hat model) and the other constantly dipping a toe into the ‘new stuff’ that is coming.

When Docker first came out my thoughts were, in chronological order, ‘I don’t understand this’, ‘that’s a nice little idea’, ‘that’s a stunning idea’ and ‘that’s the future’ (although ‘I don’t understand this’ did pop up a lot more).

And then Kubernetes came on the scene. And with a good deal of clever forethought, Red Hat and the Open Source communities dropped their ‘super-controllers’ around Docker and other segmentation/containerisation technologies, and jumped wholeheartedly on the Kubernetes bandwagon (or should that be sailing ship, keeping true to the Greek etymology of the project’s name).

If you’ve read any of my other posts you’ll know my day-to-day job revolves around knowing OpenShift inside and out, from the perspective of why it is Enterprise strength and so on. Part of that role has led me to get an understanding of what Kubernetes actually is.

I’ve blogged on the fundamentals of Kubernetes before, but I’m going to reiterate my simple explanation because it is completely relevant to what I want to enthuse about in this post, namely a little prototype called KCP that does something... brilliant.

So, Kubernetes is a Container Orchestration system. And that is the worst description I will ever type around Kubernetes; saying that is like saying a Ferrari is four tyres and a chunk of metal. It describes it perfectly while missing the point; you don’t buy a Ferrari for four tyres and a chunk of metal. You buy a Ferrari (if you’re not a software engineer/solution architect and can actually afford one) because of its elegance, its sophistication, and the crafting and engineering that make it much more than four tyres and a chunk of metal.

So, Kubernetes is, at its heart, a reconciliation-based state machine. In actuality it’s two systems; one is a ‘virtual’ system, comprising the creation and manipulation of ‘objects’, and the other is a physical one, where the representations of the virtual objects are instantiated and kept compliant by ‘drones’ driven by changes in the object model.

In English; when you interact with a Kubernetes system you challenge it to keep a set of required states. Kubernetes balances the physical instantiation of the Objects with the required state of the Objects held centrally.

At its heart that is it; the map of Object model to state is kept centrally (in etcd) and manipulated via a control plane, where the Objects have their own dedicated controllers whose job is to task physical instantiators (the kubelets that live on the Worker nodes) to realise and keep compliant the required state.

If the physical instantiations change, e.g. a Pod fails, then the controller, in tandem with the kubelet, will try to restore the required state. The lovely thing about this is the disconnect; the control plane owns the intended Object state, the kubelets resolve and report.
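To make that loop concrete, here is a minimal sketch of the idea in Python. It is not how Kubernetes is implemented; `DesiredState`, `FakeNode` and `reconcile` are names I’ve made up for illustration, with `DesiredState` standing in for the object model held in etcd and `FakeNode` standing in for a kubelet that starts, stops and reports on ‘Pods’.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DesiredState:
    """Hypothetical stand-in for the centrally held object model."""
    replicas: dict[str, int] = field(default_factory=dict)  # app -> wanted count


@dataclass
class FakeNode:
    """Hypothetical stand-in for a kubelet: runs 'Pods' and reports on them."""
    running: dict[str, int] = field(default_factory=dict)  # app -> running count

    def report(self) -> dict[str, int]:
        # Return a copy so the caller sees a snapshot, not live state.
        return dict(self.running)

    def start(self, app: str) -> None:
        self.running[app] = self.running.get(app, 0) + 1

    def stop(self, app: str) -> None:
        self.running[app] -= 1
        if self.running[app] == 0:
            del self.running[app]


def reconcile(desired: DesiredState, node: FakeNode) -> list[str]:
    """One pass: compare desired with observed state and correct any drift."""
    actions: list[str] = []
    observed = node.report()
    for app in sorted(set(desired.replicas) | set(observed)):
        want = desired.replicas.get(app, 0)
        have = observed.get(app, 0)
        for _ in range(want - have):  # too few running: start the shortfall
            node.start(app)
            actions.append(f"start {app}")
        for _ in range(have - want):  # too many running: stop the surplus
            node.stop(app)
            actions.append(f"stop {app}")
    return actions


if __name__ == "__main__":
    desired = DesiredState({"web": 2})
    node = FakeNode()
    print(reconcile(desired, node))  # brings 'web' up to two instances
    node.stop("web")                 # simulate a Pod failing
    print(reconcile(desired, node))  # the next pass notices and repairs it
```

The point to notice is that `reconcile` never remembers what it did last time; it only ever compares what is wanted with what is reported, which is why a failed Pod gets picked up on the next pass without any special handling.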

I have digressed but I hope you get the point; Kubernetes is a brain and a set of physical points that are updated in a disconnected, ‘fire and forget for now’ fashion, and that then report state changes back to the brain, which decides whether the state has been resolved or not.

The thing is this; it’s brilliant, but it is 100% linked to Containers/Pods. The kubelets just handle the orchestration and health of Pods on their node. And this is where KCP comes in.

So KCP is effectively the brain unhindered by the physicality of Kubernetes. And that’s a great thing; it’s the control plane with all its brilliant ability to reconcile and maintain Object state, but it is not limited to the physicality of orchestrating Pods.

And this is completely my take from my understanding of the Kubernetes mechanisms and what I’ve seen from the KCP project.

Why is this brilliant? Because it means you can use that disconnected, two-step reconciliation approach for anything you can write an end controller for.

To me the goal of KCP is to provide that for any type of system that can be reached; imagine a pseudo-kubelet that provides orchestration over, say, a set of autonomous robots. You will be able to use KCP to control, reconcile and ensure compliance of the end state of the robots.

This disconnection of the ‘brain’ side from the physical realisation side means the sky is the limit in terms of what you could eventually control with KCP. Anything that has a defined state and needs to be kept compliant with it can be architected to behave like a kubelet, and then controlled by KCP.
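As a thought experiment, this is roughly what I have in mind, sketched in Python. To be clear, none of this is KCP’s actual API; `Agent`, `RobotFleet` and `reconcile` are entirely hypothetical names, and a robot having a single ‘mode’ is an assumption made to keep the example small. The ‘brain’ only knows about desired and observed state; the agent is the only part that knows it is dealing with robots.

```python
from __future__ import annotations

from typing import Protocol


class Agent(Protocol):
    """Hypothetical 'pseudo-kubelet' contract: observe the real thing, apply a change."""

    def observe(self) -> dict[str, str]: ...

    def apply(self, key: str, value: str) -> None: ...


class RobotFleet:
    """Made-up agent for a fleet of robots, each with a single 'mode'."""

    def __init__(self, modes: dict[str, str]) -> None:
        self.modes = dict(modes)

    def observe(self) -> dict[str, str]:
        return dict(self.modes)

    def apply(self, key: str, value: str) -> None:
        # A real agent would talk to the robot here; this one just records the mode.
        self.modes[key] = value


def reconcile(desired: dict[str, str], agent: Agent) -> list[str]:
    """Generic control-plane step: knows nothing about robots, only about drift."""
    observed = agent.observe()
    drifted = [k for k in sorted(desired) if observed.get(k) != desired[k]]
    for key in drifted:
        agent.apply(key, desired[key])
    return drifted


if __name__ == "__main__":
    fleet = RobotFleet({"r1": "idle", "r2": "charge"})
    desired = {"r1": "patrol", "r2": "charge"}
    print(reconcile(desired, fleet))  # only r1 has drifted, so only r1 is touched
    fleet.modes["r2"] = "idle"        # simulate a robot wandering off-state
    print(reconcile(desired, fleet))  # the next pass pulls r2 back into line
```

Swap `RobotFleet` for anything else that can report its state and accept a change, and `reconcile` does not need to know the difference.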

I really like that idea – the KCP project is very young and is currently just a prototype and a (comprehensive) list of targets, but my gut says it will be a very interesting thing to follow.

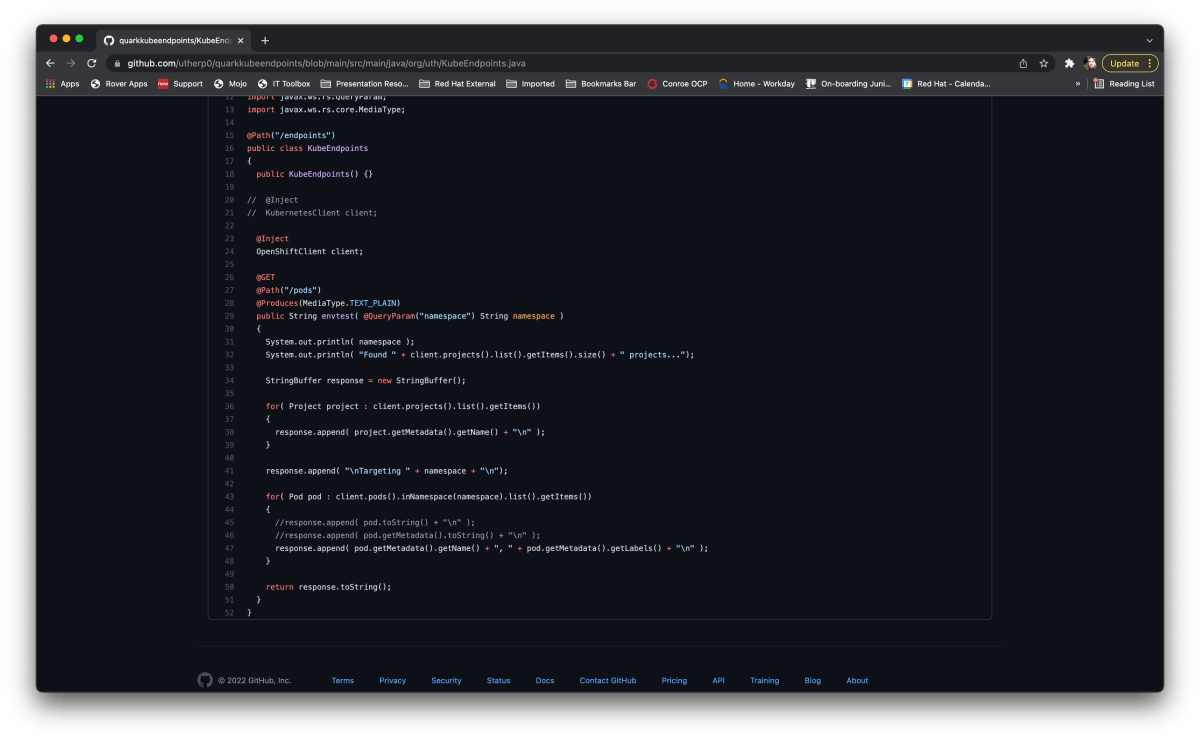

The current git repo for the project is at https://github.com/kcp-dev/kcp and the goals/roadmap are at https://github.com/kcp-dev/kcp/blob/main/GOALS.md – have a read and see what you think…